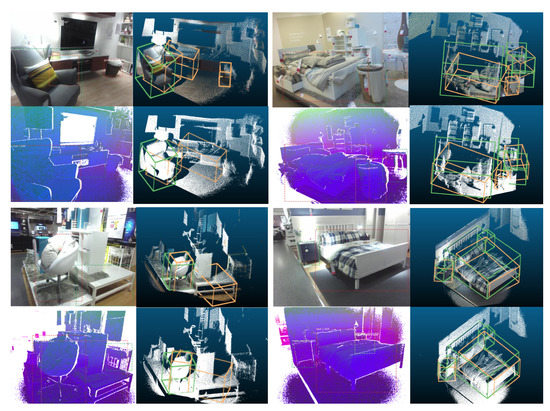

An added instance segmentation module and further experiments are presented here (results from the SUN RGB-D dataset):

This work was partially supported by NSF Award CNS1625843 and Google Faculty Research Award 2017 ("Classification of urban objects in 3D point clouds"). We acknowledge the support of NVIDIA with the donation of the Titan-X GPU used for this work. Finally, support has been provided by CUNY PSC-CUNY and Bridge funds.