Lectures: Tue, Thu 5:30 PM - 6:45 PM, HN C-101, In Person :)

Professor

Saad Mneimneh, HN 1090L

Office hours: Thu 10:15 - 11:15, 1:00 - 2:00 pm, or by appointment


Suggested books (not required)

Bayesian statistics: an introduction, Peter Lee

Bayesian computation with R, Jim Albert

Data analysis: A Bayesian tutorial, Sivia

Some notes will also be provided from time to time.

All the documents posted on this website, including lectures and homework assignments, are subject to copyright.

These documents are the property of the author.

It is illegal to distribute these documents or post them on third-party websites.

Lectures (These are from last year)

Lecture 1 Probability axioms, independence, conditioning. Read first two pages of Note 1.

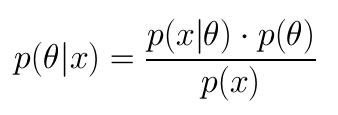

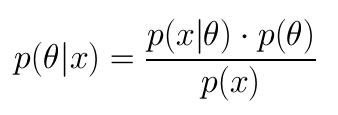

Lecture 2 More on independence, multiplication rule, Bayes rule, examples of conditioning (Bayes). Read Note 1 and first two pages of Note 2.

Lecture 3 Examples of using Bayes rule (listing outcomes, probability tree, "symbolic"), sequential (keeping history) vs one shot Bayes. Read Note 2 except for Monty Hall.

Lecture 4 More examples of using Bayes rule, finish Note 2.

Lecture 5 Random variables (discrete case), Uniform, Binomial, Geometric, and Bernoulli RVs (and PMFs), expectation. Read most of Note 3.

Lecture 6 Nested expectation, variance, continuous random variable, density, independence, conditioning, and Bayes rule in the continuous setting. Finish Note 3 and read Note 4.

Lecture 7 Finding densities, expectations, and conditional densities, an example with a uniform random variable. Finish Note 4.

Lecture 8 Other random variables, Poisson and exponential distributions. Read Note 5 pages 1-4.

Lecture 9 The Central Limit Theorem and the normal density. Finish Note 5.

Lecture 10 Bayes with Normal densities, conjugate priors, the case of Normal-Normal. Read Sections 1, 2, and 3 in Note 6.

Lecture 11 Application of Bayes to the mean of two groups, improper/reference prior, mixture of priors. Read Note 6, not including Lindley's paradox.

Lecture 12 Mixture of discrete and continuous priors, Lindley's paradox. Finish Note 6.

Lecture 13 Change of variable technique, the Chi-squared density, Gamma function, and Chi-squared test. Read first half of Note 7.

Lecture 14 Chi-squared as a prior, the unknown variance case. Read Note 7.

Lecture 15 Student's t-distribution, t-tests, and the Student prior. Note 8.

Lecture 16 The Beta density as a prior and its conjugate form, Laplace's rule of succession. Read Note 9 Sections 1, 2, and 3.

Lecture 17 An example of a Beta mixed prior. Polya's urn. Finish Note 9 (the lecture notes are a bit clearer in terms of presentation).

Lecture 18 Markov chains. Note 10.

Lecture 19 Markov, Bayes, and Viterbi. Note 11.

Lecture 20 Viterbi, forward, and backward algorithms.

Lecture 21 Reversibility and the detailed balance equations.

Lecture 22 Rejection sampling and the Metropolis-Hastings algorithm.

Lecture 23 Gibbs sampling.

Notes

Note 1

Note 2

Note 3

Note 4

Note 5

Note 6

Note 7

Note 8

Note 9

Note 10

Note 11

Note 12

I will continue posting notes in the future...

Homework

Homework 1 Due 9/6/2022

Homework 2 Due 9/15/2022

Homework 3 Due 9/22/2022

Homework 4 Due 10/6/2022

Homework 5 Due 10/20/2022

Homework 6 Due 10/27/2022

Homework 7 Due 11/3/2022

Homework 8 Due 11/10/2022

Homework 9 Due 11/29/2022

Homework 10 Due 12/8/2022

Project (for graduate students)

(coming soon) Due 24 hours after final.

This is also when the report is due.

Grading

Homework 20%

Project 10% (take home for graduate students)

Quiz 1 15% grad, 20% undergrad

Quiz 2 15% grad, 20% undergrad

Final 30%

Report 10%. Ideas: Simpson's paradox, Sleeping Beauty, Naive Bayes, Jeffreys prior, Laplace's estimation of Saturn's mass. Other ideas are welcome...